Beyond Anecdotes: Balancing Qualitative and Quantitative Data for Smarter Product Decisions

March 14, 2025

The Danger of Relying Solely on Qualitative Feedback in Product Development

A few years ago, I had a leader who frequently said, “Anecdotes are a leaping-off point — NOT an endpoint.” At the time, I was a new Product Manager, and after running to Google to figure out what exactly an “anecdote” was, I started to reflect on what this advice meant for my role.

The saying stuck with me, especially as I tackled my first major project: redesigning our website. Initially, our goal was straightforward — modernize a decade-old interface that no longer adhered to accessibility standards. It was outdated, and we had a strong case for a refresh. But my leader challenged us to go deeper, asking how this redesign would impact our P&L and how we were validating the need for specific changes for the masses.

Without hesitation, I proudly explained that we planned to interview ten users to gather their feedback. My leader smiled and asked, “That’s great — but what about the millions of people who use the site annually?” That moment was pivotal. It highlighted a critical flaw in our approach: while qualitative feedback is valuable, relying solely on a few opinions wasn’t enough to justify such a significant financial investment. We needed to go deeper.

The Value of Scaling Insights

After conducting those initial interviews, we used the themes that emerged to design a broader survey for 5,000+ users. This approach allowed us to quantify both the spread and severity of the issues we uncovered, validating — or invalidating — our assumptions at scale.

For instance, in our interviews, most users expressed excitement about receiving gift cards as rewards through our website. Based on this feedback, our initial hypothesis was to keep this feature because the handful of initial users we spoke to liked it. However, when we surveyed thousands of users, we discovered that gift cards were a low priority for a majority of them. Instead, users overwhelmingly preferred direct cash rewards or rewards offering the most “bang for their buck.” This broader insight revealed that while gift cards resonated with a few, they didn’t meet the preferences of the larger user base.
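One rough way to see why ten interviews and a survey of thousands carry such different weight is the margin of error on a reported proportion. The sketch below uses the standard normal-approximation formula at a 95% level. The 50% figure is a hypothetical placeholder, not a number from our research, and the formula assumes a random sample, which a handful of interviews rarely is. The approximation is also crude for very small samples, so treat the output as directional.

```python
import math


def margin_of_error(p: float, n: int, z: float = 1.96) -> float:
    """Approximate 95% margin of error for a proportion p observed in n responses."""
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical: half of respondents say they like a feature.
for n in (10, 5000):
    print(f"n={n}: +/- {margin_of_error(0.5, n):.1%}")
```

With these inputs, the ten-person sample comes out at roughly plus or minus 31 percentage points, while the 5,000-person sample is closer to plus or minus 1.4.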

By scaling our insights, we avoided over-investing in feedback from a vocal minority. This enabled us to redirect funds from the costly gift card program to higher cash payouts, delivering greater value to users and positively impacting our bottom line as well.

This experience taught me the importance of balancing qualitative and quantitative research methods. Qualitative feedback uncovers hidden insights and helps shape hypotheses, but it must be supported by quantitative data to ensure decisions are grounded in reality. We all have assumptions shaped by recency bias — or by prioritizing the feedback of “squeaky wheels.” By broadening our research to assess both the spread (how many users share an opinion) and severity (how impactful the issue is), we avoided supporting a costly feature that didn’t deliver significant value for the majority of users.
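As a minimal sketch of what measuring spread and severity could look like, the snippet below tallies survey responses by theme. The field names, the 1-to-5 severity scale, and the sample data are all assumptions for illustration, not the survey we actually ran.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Response:
    user_id: str
    issue: str      # theme that emerged from the interviews (hypothetical labels)
    severity: int   # self-reported, 1 (minor annoyance) to 5 (blocking)


def summarize(responses, total_respondents):
    """Return spread (share of respondents) and mean severity per issue."""
    by_issue = {}
    for r in responses:
        by_issue.setdefault(r.issue, []).append(r)

    summary = {}
    for issue, rows in by_issue.items():
        unique_users = {r.user_id for r in rows}
        summary[issue] = {
            "spread": len(unique_users) / total_respondents,
            "severity": mean(r.severity for r in rows),
        }
    return summary


# Hypothetical data: four respondents, each raising one issue.
sample = [
    Response("u1", "wants_gift_cards", 2),
    Response("u2", "wants_higher_cash_payout", 5),
    Response("u3", "wants_higher_cash_payout", 4),
    Response("u4", "wants_higher_cash_payout", 3),
]

for issue, stats in summarize(sample, total_respondents=4).items():
    print(issue, stats)
```

An issue that is both widespread and severe is a strong candidate for investment. One that is severe for only a few users may still deserve attention, but it calls for a different conversation.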

Avoiding the Anecdotal Trap (Again)

Fast forward a few years: I joined a new organization with more advanced data analytics capabilities than I had previously experienced. One tool in particular emailed daily user feedback directly to the various product teams’ inboxes. While helpful, these anecdotal comments occasionally caused disruptions. For example, a user might say, “I can’t log in!” or “Your feature stinks,” and soon my inbox would be flooded with stakeholders forwarding the same comments.

The most common issue involved users forgetting their passwords. While it’s normal for password-related queries to arise in support channels, the repeated forwarding of this feedback raised concerns within our team, which was responsible for the user experience. Was there something wrong with our sign-in process? Did we have a “high-priority” issue affecting a large number of users?

A deeper dive into system logs and error data at scale routinely showed that everything was working as intended. Instead of taking unsolicited meetings with stakeholders on the subject each week, we could point to that data. The experience reinforced an important lesson: while individual feedback is worth exploring, quantitative data is essential to determine whether an issue affects the majority or just a vocal minority.

Each time an email chain triggered a fire drill, our team used the tools we had in place to monitor the user experience and ensure nothing significant was being overlooked. More often than not, there wasn’t a large-scale issue, and providing stakeholders with the necessary quantitative data allowed us to call off the fire drill.
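The check behind calling off a fire drill can be sketched in a few lines. Everything here is hypothetical: the function names, the numbers, and the rule of thumb that "twice the recent baseline" counts as unusual. A real monitoring setup would pull these figures from system logs and use whatever alerting thresholds the team has agreed on.

```python
def failure_rate(attempts: int, failures: int) -> float:
    """Share of sign-in attempts that failed."""
    if attempts == 0:
        return 0.0
    return failures / attempts


def is_anomalous(today_rate: float, baseline_rates: list[float], tolerance: float = 2.0) -> bool:
    """Flag today's rate only if it exceeds the recent average by the given multiple."""
    baseline = sum(baseline_rates) / len(baseline_rates)
    return today_rate > baseline * tolerance


# Hypothetical numbers: a forwarded "I can't log in!" email arrives on a normal day.
recent_days = [0.019, 0.021, 0.020]
today = failure_rate(attempts=200_000, failures=4_000)

if is_anomalous(today, recent_days):
    print("Sign-in failures are well above baseline; investigate.")
else:
    print("Sign-in failures are in the normal range; no fire drill needed.")
```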

Eventually, our data analytics team added a disclaimer to the daily feedback emails, reminding stakeholders that these comments reflected the perspective of a single user. This wasn’t intended to dismiss the feedback but to emphasize the importance of validating anecdotes with additional data before taking action.

Balancing Confidence with Action to Get Sh*t Done

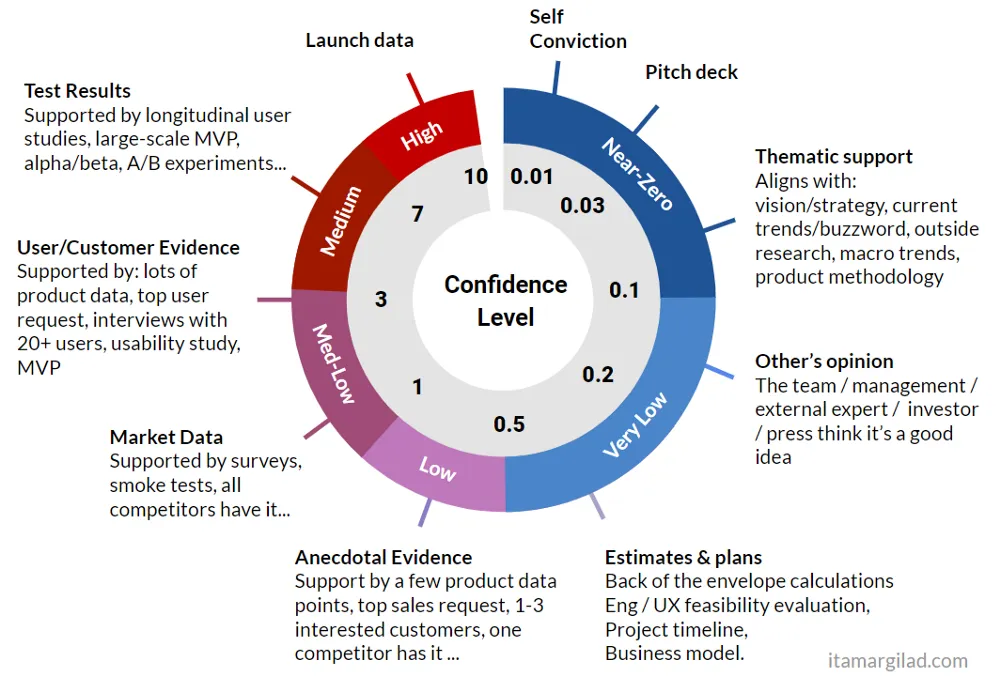

As I matured as a Product Manager, I became increasingly reliant on tools like Itamar Gilad’s Confidence Meter to identify what kinds of evidence we had at a given moment to support an investment decision. In product development, every decision, whether it’s prioritizing features or pursuing new ideas, requires confidence backed by data. Without it, teams risk chasing “shiny objects” that fail to deliver meaningful results. Product development is expensive, and businesses that don’t perform the necessary checks can find themselves months or years into a project that ultimately does not provide the expected value or meet a specific user need.

The Confidence Meter helps teams prioritize work based on their level of validation, ensuring that anecdotes don’t carry the same weight as several layers of data-backed insights. This framework became a vital part of my decision-making process as a Product Manager, helping our team avoid the pitfalls of low-reach, low-impact features. In a previous role, we used the RICE prioritization technique (Reach, Impact, Confidence, Effort) to operationalize our backlog, scoring opportunities against one another on a level playing field.

However, scoring shouldn’t be done in a silo, and the solution isn’t to hide behind your backlog or push back whenever data is lacking. As a Product Manager, the key is to have open discussions with stakeholders about the current level of confidence and to determine when additional upfront research and validation is needed before allocating resources to hands-on-keyboard development. I firmly believe that de-risking large bets before making them is always best practice.
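For readers who haven’t used RICE, here is a minimal sketch of the scoring, using the commonly cited formula of Reach times Impact times Confidence, divided by Effort. The opportunities, the numbers, and the idea of assigning a lower confidence value to an anecdote-only idea are illustrative assumptions, not our actual backlog or an official mapping from the Confidence Meter.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    reach: float       # users affected per quarter (hypothetical unit)
    impact: float      # relative impact per user, e.g. 0.25 (minimal) to 3 (massive)
    confidence: float  # 0.0 to 1.0, driven by the strength of supporting evidence
    effort: float      # person-months

    def rice(self) -> float:
        """RICE score: (reach * impact * confidence) / effort."""
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical backlog: one idea backed only by an anecdote, one backed by a survey.
backlog = [
    Opportunity("Idea from a single forwarded email", reach=500, impact=1, confidence=0.2, effort=2),
    Opportunity("Fix validated by in-app poll", reach=4000, impact=2, confidence=0.8, effort=4),
]

for opp in sorted(backlog, key=lambda o: o.rice(), reverse=True):
    print(f"{opp.name}: {opp.rice():.0f}")
```

The point of the exercise is less the exact score than the conversation it forces: a low confidence value is a visible prompt to go gather more evidence before committing resources.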

In my current role, we have developed feedback features in our product that allow us to get answers at scale quickly. One example illustrates this well: a feature lacked sufficient behavioral analytics to guide decisions. We received reports through customer service and social media that a particular process in our mobile application was cumbersome and confusing. Users would start the process within the product but ultimately had to complete it outside the app, leading to frustration. Our team wanted to improve this process, so we started by polling users at scale within the app.

By doing this, we were able to engage with nearly 4,000 users who shared their thoughts and frustrations about the process. We then updated the experience in our app, adding more robust guidance and instructions. As a result, we saw a significant improvement in the key metrics associated with this process and identified additional pain points to address in a future iteration. Polling users at scale allowed us to go beyond isolated complaints in public channels and deeply understand the root causes of many users’ frustrations. This approach enabled us to generate actionable insights and iterate on the feature, ultimately driving better outcomes for both our users and our business.

Reiterating Why Both Qualitative and Quantitative Data Matter

Relying solely on qualitative feedback is like trying to complete a puzzle with only half the pieces. While anecdotes provide valuable context and humanize the user experience, they are inherently limited in scope. Without quantitative data, it’s impossible to understand the scale of an issue or opportunity.

Conversely, quantitative data alone can lack nuance. Numbers may tell you what’s happening, but they often don’t explain why. By combining both types of data, you can:

- Prioritize Effectively: Focus resources on the initiatives with the greatest potential impact, not just your pet project or your leadership team’s.

- Generate Hypotheses: Use qualitative insights to uncover user “Opportunities,” otherwise known as pain points, needs, or desires.

- Validate at Scale: Test these hypotheses with quantitative data to ensure they represent the broader audience.

Closing Thoughts

If it’s not clear by now, I am a bit of a product perfectionist. I’d rather invest in ideas with a high likelihood of success than chase features that don’t move the needle. I’ve seen teams spiral and waste effort when they focus on low-reach, low-impact ideas, driven by the loudest voice in the room rather than validated, user-driven insights.

That’s why I now champion the mantra: “Anecdotes are a leaping-off point — NOT an endpoint.” While I haven’t committed to getting it tattooed just yet, I might one day. After all, it’s the Kool-Aid I’m still drinking.

At the same time, it’s important to acknowledge the risks of over-relying on quantitative data. Hiding behind numbers can create a disconnect from user value. By focusing solely on business metrics or behavioral analytics, we risk overlooking subtler user experiences or emerging trends that data alone cannot reveal.

Great product development is about balancing art and science. Anecdotes and qualitative feedback provide the art — the stories that help us empathize with users on a human level. Quantitative data is the science — the hard numbers that tell us where to focus. Together, they empower us to make tough decisions and deliver meaningful, scalable outcomes for both users and businesses.

Failing to deliver results is costly. That’s why it’s worth spending just a little more time in the problem space before jumping into building something new and shiny.

What’s your approach to balancing qualitative and quantitative data? How do you determine the right amount of information needed to make a product development bet? Let’s connect and keep the conversation going!

Sean is a Product Manager at Livefront, honing product decision-making skills to drive impact.