
Moderated vs. Unmoderated User Testing and the Goldilocks Method That’s Just Right

April 8, 2025

Introduction

When it comes time to test a design or product with users, teams often face a classic dilemma: Should we do moderated or unmoderated testing? Moderated testing is great for complex ideas or exploratory concepts and provides meaningful insights, but it requires greater time and effort. Unmoderated testing is great for understanding usability and is usually quick and cost effective, but it can fall short for deeply understanding user behavior.

To use a storybook analogy, it’s a bit like Goldilocks and the Three Bears. One option can feel too hot (too costly and time intensive), while the other can feel too cold (too limiting and hands-off). However, much like Goldilocks’ preferred porridge, there’s a hybrid approach that could be just right.

Before exploring the hybrid approach, it’s helpful to first understand the differences between moderated and unmoderated user testing, as well as qualitative and quantitative data.

Moderated user testing (too hot)

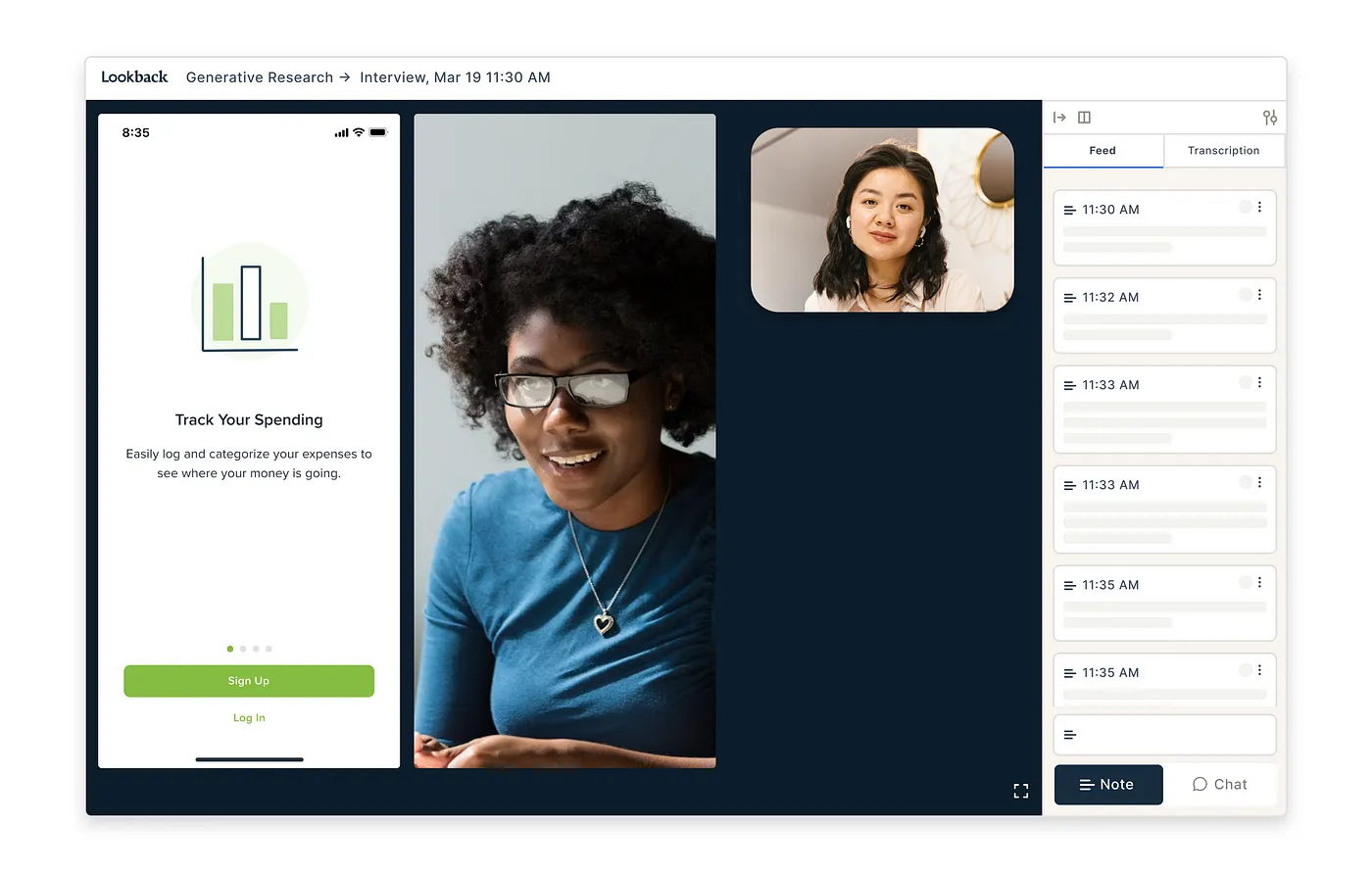

Moderated user testing is facilitated by a user researcher or someone on the team who guides the user through a task, prototype test, or set of questions. This testing can be conducted in person or virtually through tools like Zoom, Teams, and Lookback.

Moderated user testing is inherently suited for collecting qualitative data through open-ended questions and task observation. Qualitative data includes non-numerical data such as feedback, opinions, feelings, and body language. This method of testing seeks to understand user behavior, pain points, and reasoning to answer why questions:

- “Why did you navigate to this screen first?”

- “Why do you prefer this option?”

- “Why did you expect that outcome?”

Pros

- Supports complex or exploratory ideas — This method is great for testing complicated designs or concepts that aren’t fully formed yet

- Unspoken feedback — Real-time interactions and user observations, like facial expressions, provide additional insights into user feedback

- Deeper insights — Moderators can ask specific and immediate follow-up questions to dig deeper into feedback or trains of thought

- Quality feedback — Moderator supervision minimizes the risk of a tester speeding through the test with low-quality input

Cons

- Time investment — Moderated testing requires an extensive time investment to coordinate, schedule, and conduct interviews and synthesize findings and notes

- Higher cost — Greater time investment drives operational costs, and longer tests require greater compensation for users’ time

- Observer bias — Users may alter their natural behavior due to being observed by a moderator, or they may provide more positive feedback to avoid hurting feelings or causing offense

Unmoderated user testing (too cold)

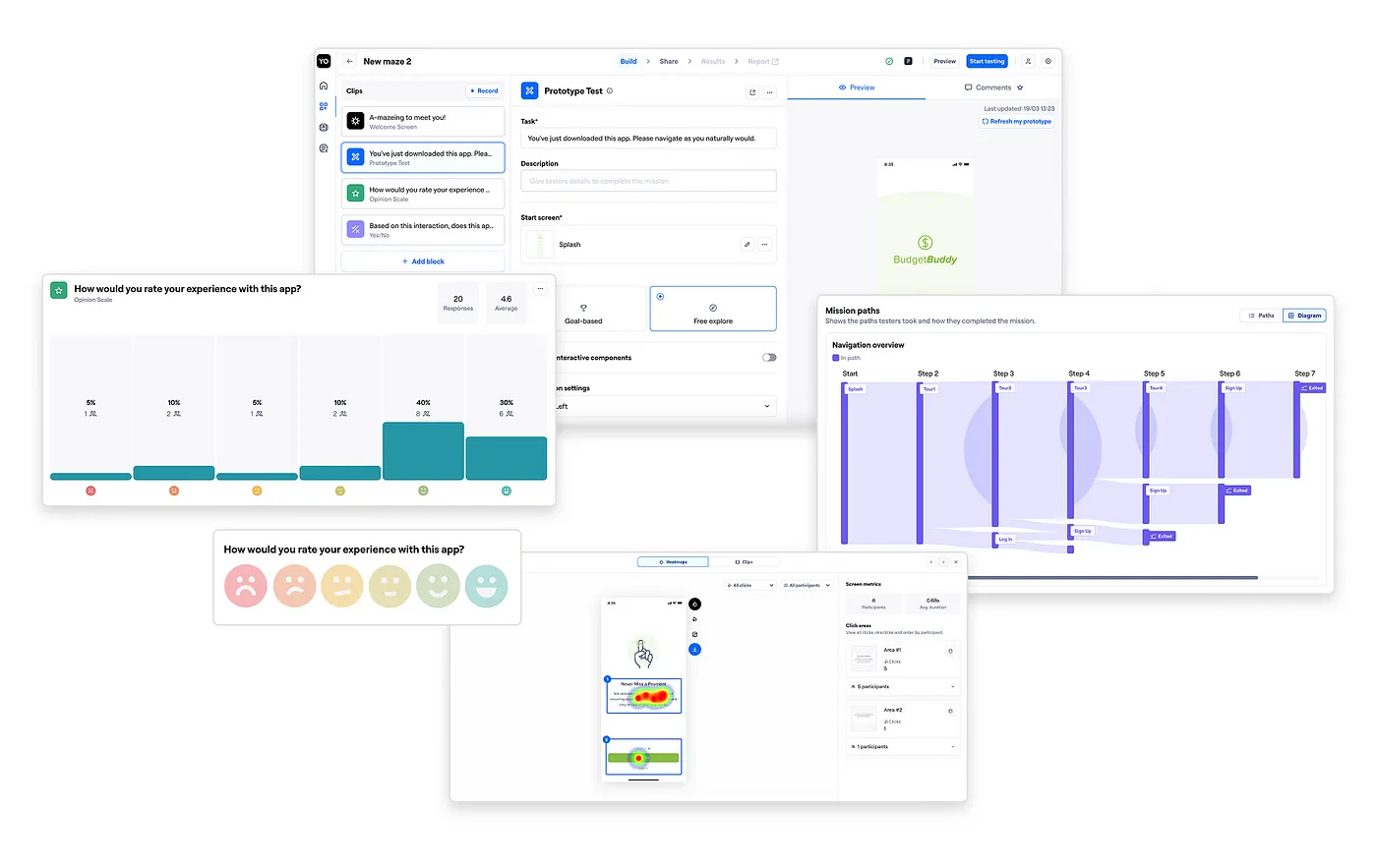

Unmoderated user testing is done asynchronously, which allows users to complete tests on their own schedule by a set deadline. These tests are made available through platforms such as Maze, UserTesting, and Hotjar, and survey platforms such as SurveyMonkey and Qualtrics. Unmoderated tests typically involve an interactive prototype or visual, and some examples include A/B tests, click-tracking, and heat-mapping.

Traditionally, unmoderated user testing was best suited for collecting quantitative data. Quantitative data is numerical and measurable, such as success rates and time on task. This testing seeks to understand the effectiveness and usability of a design to answer how questions:

- “How did option A perform against option B?”

- “How long were users on this screen?”

- “How many users clicked here?”

Pros

- Scalable — Concepts can be tested quickly at high volume

- Cost-effective — Shorter tests can require less compensation, and many testing tools offer cost-effective recruitment panels

- Unbiased behavior — Users may act more naturally and authentically when they are not being observed by a moderator

Cons

- User dependent — The quality of insights depends on the level of engagement and participation from your users

- Allows for ambiguity — Since there’s no interaction with users, there’s no ability to clarify responses or reasoning
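To make the quantitative side concrete, here is a minimal Python sketch of how success rate and time on task might be summarized per design option once results are exported from a testing tool. The `Session` record, its field names, and the sample numbers are all hypothetical and made up for illustration; real platforms export their own formats and usually compute these metrics for you.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Session:
    """One participant's attempt at a task (hypothetical export format)."""
    variant: str            # which design option was shown, e.g. "A" or "B"
    completed: bool         # whether the participant finished the task
    seconds_on_task: float  # how long the attempt took


def summarize(sessions):
    """Return {variant: (success_rate, median_seconds_on_task)}."""
    by_variant = {}
    for s in sessions:
        by_variant.setdefault(s.variant, []).append(s)

    summary = {}
    for variant, group in by_variant.items():
        # Success rate: share of sessions in which the task was completed.
        success_rate = sum(s.completed for s in group) / len(group)
        # Median is less sensitive than the mean to one very slow participant.
        typical_time = median(s.seconds_on_task for s in group)
        summary[variant] = (success_rate, typical_time)
    return summary


# Made-up sample data, for illustration only.
sample = [
    Session("A", True, 40.0), Session("A", False, 75.0),
    Session("A", True, 50.0), Session("A", True, 45.0),
    Session("B", True, 30.0), Session("B", True, 35.0),
    Session("B", False, 90.0), Session("B", False, 62.0),
]

for variant, (rate, seconds) in sorted(summarize(sample).items()):
    print(f"Option {variant}: {rate:.0%} success, {seconds:.1f}s median time on task")
```

Numbers like these answer the how questions above, but with only a handful of sessions they are a rough signal rather than proof that one option outperforms the other.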

Unmoderated qualitative user testing (just right)

While both methods have pros and cons, many testing tools have introduced new features in recent years to allow for the collection of qualitative insights through unmoderated tests. For example, some tools now allow testers to record their screen, audio, and video while asynchronously engaging with tests and prototypes, allowing them to give real-time feedback and reactions that your team can review and analyze later. By leveraging these new capabilities, it’s possible to channel your inner Goldilocks and achieve a hybrid approach that’s just right for many teams and testing scenarios.

Pros

- Fast and scalable — Because testing is done asynchronously, results can be gathered quickly and behind the scenes, often within 1–2 days

- Cost-effective — Unmoderated testing reduces recruitment and compensation costs compared to moderated testing

- Provides qualitative insights — Screen, video, and audio recordings collect real-time interactions and reactions

- Provides quantitative insights — Testing platforms collect data like clicks, time on task, and other key metrics

Cons

- User dependent — Like all unmoderated testing, the quality of insights depends on the level of engagement and participation from your users

- Tool dependent — Since there’s no interaction with users, the quality of feedback is dependent on the capabilities of the tool

- Allows for ambiguity — Audio recording allows users to add context to their actions, but without a moderator, there’s no ability to clarify responses or reasoning

One tool that I personally enjoy using for this hybrid approach is Maze. Maze offers some great features for unmoderated qualitative testing:

- User recruitment panel — Participants can be filtered by demographics or screened through custom questionnaires to target specific user segments

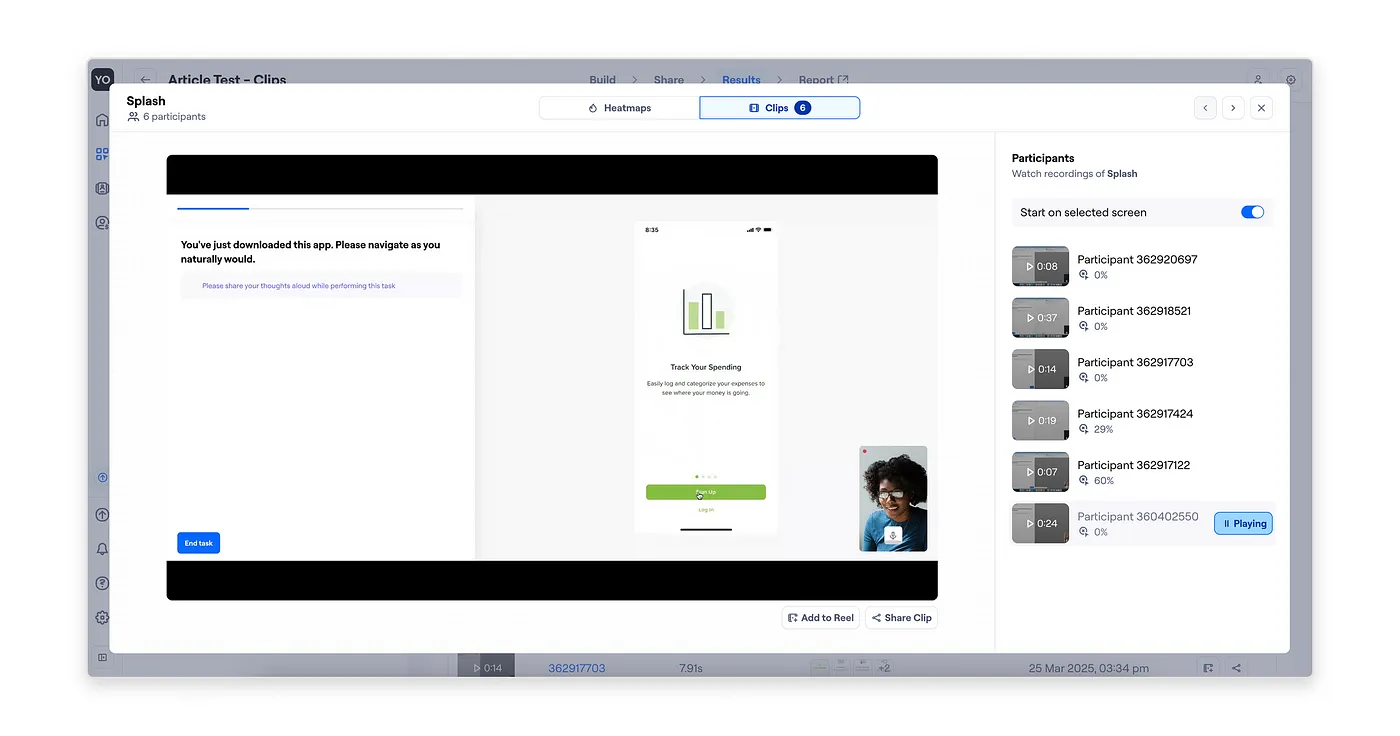

- Audio, video, and screen recording — Enabling Clips on your prototype tasks allows you to observe user behavior, reactions, and remarks without being present

- AI-generated follow-up questions — Follow-up questions prompt users to provide more detail and reasoning on open-response questions, mitigating short and non-descriptive feedback

- AI-generated theming and analysis — Results are automatically tagged into themes to speed analysis on open-response feedback

Maze also encourages quality feedback by allowing you to set audio, video, and screen sharing as a requirement for users to qualify for and start a test. Once started, users are given instructions to read the tasks aloud and share their first impressions and thoughts verbally as they go through the task. If no audio is detected, users will get a reminder to speak aloud, and if users stop sharing their microphone or camera during the test, it will be paused until they resume sharing.

The bear necessities

At the end of the day, moderated and unmoderated user testing are both great options depending on the questions you need to answer, your project’s timeline, and your budget. Unmoderated qualitative user testing is just another option, but can be a powerful hybrid method for many cases. Just remember, it doesn’t matter whether you prefer your porridge hot or cold, but skipping user testing altogether is the real bear!